
A/B Testing in Web Design

Optimizing User Experience

Web design is an essential aspect of creating a user-friendly and effective online presence. It's not just about aesthetics; it also impacts the user experience, which, in turn, affects your website's performance. A well-designed website can attract and retain visitors, encourage them to engage with your content, and ultimately convert them into customers or loyal users.

One way to optimize your website's design and user experience is through A/B testing, a method that allows you to compare two versions of a web page and determine which one performs better. This practice has become increasingly popular among web designers, marketers, and businesses aiming to enhance their online presence and, ultimately, their conversion rates.

In this guide, I'll explore the concept of A/B testing in web design, its importance, key elements, the A/B testing process, tools and software, and best practices, and I'll share some case studies to illustrate its benefits.

Let's dive in!

Table of Contents

- What is A/B Testing?

- Why is A/B Testing Important in Web Design?

- Key Elements of A/B Testing in Web Design

- A/B Testing Process

- Tools and Software for A/B Testing

- Best Practices for A/B Testing in Web Design

- Case Studies

What is A/B Testing?

Definition and Purpose

A/B testing, also known as split testing, is a method used to compare two versions of a web page or an element on a page to determine which one performs better. The primary goal of A/B testing is to optimize user experience and achieve specific business objectives, such as increasing conversion rates, click-through rates, or user engagement.

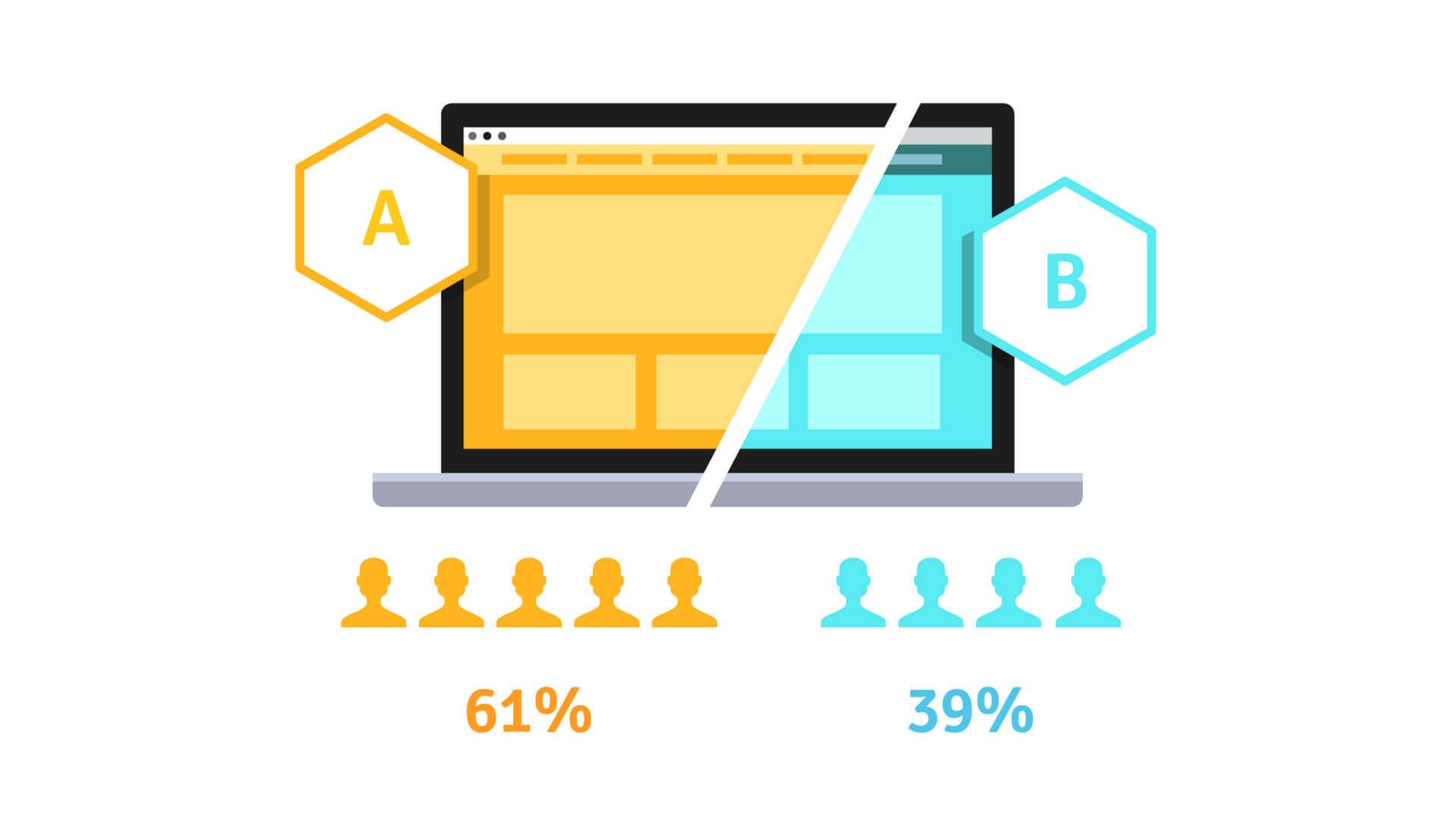

In A/B testing, you create two variations of a web page or design element: Version A (the control) and Version B (the variant). These variations are shown to different segments of your website's audience, and you collect data on how each version performs in terms of your defined objectives. The variation that yields better results is then implemented as the primary design.

For example, you could create two call-to-action elements that differ in typography, color, and layout design, and show each one in your home page header section to a randomly assigned half of your visitors over the same 90-day period. Running both versions at the same time, rather than one after the other, keeps seasonal shifts and marketing campaigns from favoring one version. Once the 90 days have passed, you can determine which call-to-action design element worked best.

Why is A/B Testing Important in Web Design?

A/B testing plays a crucial role in web design for several reasons:

Benefits of A/B Testing

- Data-Driven Decisions: A/B testing allows you to make user-centered design decisions based on actual user behavior and preferences rather than relying on assumptions or intuition.

- Improved User Experience: By testing different design elements, layouts, and content variations, you can identify and implement changes that enhance the user experience and make your website more user-friendly.

- Increased Conversions: A/B testing helps you fine-tune your design to maximize conversion rates, whether it's for sign-ups, sales, or other desired actions on your website.

- Reduced Bounce Rates: A well-designed website, informed by A/B testing, can reduce bounce rates and encourage visitors to explore more of your content.

- Enhanced SEO: A website that provides a better user experience can lead to higher search engine rankings, as search engines like Google consider user engagement and satisfaction as ranking factors.

- Competitive Advantage: A/B testing allows you to stay ahead of your competitors by continually optimizing your website and adapting to changing user preferences.

Key Elements of A/B Testing in Web Design

A successful A/B test in web design involves several key elements that need to be carefully considered and implemented:

Hypothesis

- Definition: A hypothesis is a clear and testable statement that outlines what you expect to happen when you make changes to your web design.

- Importance: A well-defined hypothesis provides direction for your A/B test. It helps you determine what specific changes you want to test and what impact you expect them to have on user behavior.

Variations

- Definition: Variations are the different versions of your web page or design element that you are testing. Typically, you have a control version (Version A) and a variant version (Version B).

- Importance: Variations allow you to test specific design changes or elements, such as different button colors, layout adjustments, or content variations, to see which one performs better.

Randomization

- Definition: Randomization is the process of assigning visitors to the control and variant groups randomly to ensure that the test results are not biased by user characteristics or behavior.

- Importance: Randomization helps ensure that your test results are statistically valid and reliable. It minimizes the risk of factors like user demographics or behavior skewing the results.
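
To make the idea concrete, here is a minimal sketch of one common way to randomize: hashing a visitor ID into a bucket so that each visitor is split roughly 50/50 and always sees the same version on repeat visits. The function name, the experiment name "homepage-cta", and the user ID format are assumptions made up for illustration; the testing tools covered later in this guide handle this step for you.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "homepage-cta") -> str:
    """Return 'A' or 'B' for a visitor, stable across repeat visits."""
    # Hash the experiment name together with the user ID so that
    # different experiments split the same audience independently.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Turn the first 8 bytes into an integer and map it to a bucket 0..99.
    bucket = int.from_bytes(digest[:8], "big") % 100
    # Buckets 0..49 see the control, 50..99 see the variant.
    return "A" if bucket < 50 else "B"


# Hypothetical usage: count how 10,000 made-up visitors are split.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}")] += 1
print(counts)
```

Because the assignment depends only on the ID and the experiment name, no lookup table is needed, and a returning visitor never flips between versions mid-test.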

Testing Duration

- Definition: Testing duration refers to the length of time you run your A/B test. The duration may vary depending on the type of changes you are testing and the amount of traffic your website receives.

- Importance: Determining the appropriate testing duration is essential to collect a sufficient amount of data to make informed decisions. A test that's too short may lead to inconclusive results, while a test that's too long can delay the implementation of improvements.
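
As a rough illustration of how duration follows from traffic, the sketch below estimates the number of days needed to reach a target number of visitors per version. The function name and the numbers in the usage lines are hypothetical, and rounding up to whole weeks (so that weekdays and weekends are covered equally) is a common convention I am assuming here, not a hard rule.

```python
import math


def estimate_test_days(required_per_variant: int,
                       daily_visitors: int,
                       variants: int = 2,
                       traffic_share: float = 1.0) -> int:
    """Estimate test length in days, rounded up to whole weeks."""
    # Visitors entering the test each day (traffic_share < 1.0 means
    # only part of the site's traffic is included in the experiment).
    visitors_in_test_per_day = daily_visitors * traffic_share
    days = math.ceil(required_per_variant * variants / visitors_in_test_per_day)
    # Round up to full weeks to cover the weekday/weekend cycle evenly.
    return math.ceil(days / 7) * 7


# Hypothetical numbers: 8,200 visitors needed per version, 1,500 visitors a day.
print(estimate_test_days(8200, 1500))  # 14
```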

Sample Size

- Definition: Sample size refers to the number of visitors or users you need to include in your A/B test to obtain statistically significant results.

- Importance: The sample size is critical for the reliability of your test results. A small sample size can lead to unreliable results, while a large sample size can increase the accuracy of your findings.
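
If you want a ballpark figure before you start, the following sketch uses the standard normal-approximation formula for comparing two conversion rates. The function name and its parameters are my own, and the defaults (a 5% significance level and 80% power) are conventional choices rather than requirements; most A/B testing tools ship a calculator that does the same job.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float,
                            min_detectable_lift: float,
                            alpha: float = 0.05,
                            power: float = 0.80) -> int:
    """Visitors needed in EACH version to detect a relative lift.

    baseline_rate: current conversion rate, e.g. 0.05 for 5%.
    min_detectable_lift: smallest relative change worth detecting,
        e.g. 0.20 for a 20% improvement (5% -> 6%).
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_detectable_lift)
    # Critical values for a two-sided test and the desired power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Hypothetical scenario: 5% baseline conversion, aiming to detect a 20% lift.
print(sample_size_per_variant(0.05, 0.20))
```

With these made-up inputs the result is a little over 8,000 visitors per version. Halving the lift you want to detect roughly quadruples the requirement, which is why small design tweaks need far more traffic than bold ones.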

Note: I truly believe that a mobile-first design approach is vital to the success of any web design project, so start your testing iterations with mobile devices.

A/B Testing Process

The A/B testing process in web design typically involves the following steps:

Identifying Goals and Objectives

- Define Your Objectives: Clearly state what you aim to achieve with your A/B test. Is it to increase sign-ups, improve click-through rates, boost sales, or enhance the visual hierarchy of your website?

- Select Metrics: Identify the key metrics you will use to measure success, such as conversion rate, bounce rate, or engagement time.
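
To show what these metrics look like in practice, here is a small sketch that computes conversion rate and bounce rate per version from raw session records. The record shape (the keys "variant", "pages_viewed", and "converted") is an assumption for illustration, and I am treating a single-page session as a bounce; your analytics tool may define a bounce differently.

```python
from collections import defaultdict


def summarize_sessions(sessions):
    """Return conversion rate and bounce rate for each variant."""
    totals = defaultdict(lambda: {"sessions": 0, "conversions": 0, "bounces": 0})
    for session in sessions:
        t = totals[session["variant"]]
        t["sessions"] += 1
        if session["converted"]:
            t["conversions"] += 1
        # Assumption: a session that viewed only one page counts as a bounce.
        if session["pages_viewed"] <= 1:
            t["bounces"] += 1
    return {
        variant: {
            "sessions": t["sessions"],
            "conversion_rate": t["conversions"] / t["sessions"],
            "bounce_rate": t["bounces"] / t["sessions"],
        }
        for variant, t in totals.items()
    }


# Made-up sample data.
sample = [
    {"variant": "A", "pages_viewed": 1, "converted": False},
    {"variant": "A", "pages_viewed": 3, "converted": True},
    {"variant": "B", "pages_viewed": 2, "converted": True},
    {"variant": "B", "pages_viewed": 4, "converted": True},
]
print(summarize_sessions(sample))
```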

Generating Hypotheses

- Analyze the Current Design: Examine your current web design and identify areas that may be improved. Do you need to improve the navigation menus, the layout structure, or the way content is organized on your website?

- Formulate Hypotheses: Based on your analysis, create hypotheses that suggest specific changes to your design that you believe will lead to improvements in the chosen metrics.

Creating Variations

- Design Versions A and B: Develop the control version (A) and the variant version (B) of your web page or design element. Ensure that the variations align with your hypotheses.

- Implement Changes: Make the design changes as per your hypotheses. These changes can include alterations to layout, colors, text, images, or any other relevant elements.

Randomization and Testing

- Random Assignment: Use a random assignment process to direct users to either Version A or Version B. This ensures that each user has an equal chance of encountering either variation.

- Run the Test: Launch your A/B test and monitor user interactions. Make sure that data is collected accurately, and no external factors influence the results.
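
One simple way to check that data is being collected accurately is to confirm that the traffic split matches what you intended. The sketch below is an assumption on my part rather than a required step: it runs a chi-squared goodness-of-fit test on the visitor counts, and a very small p-value suggests the assignment or the tracking is broken. The function name and the example counts are hypothetical.

```python
import math


def sample_ratio_p_value(visitors_a: int, visitors_b: int,
                         expected_share_a: float = 0.5) -> float:
    """P-value for 'the observed split matches the intended split'."""
    total = visitors_a + visitors_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((visitors_a - expected_a) ** 2 / expected_a
            + (visitors_b - expected_b) ** 2 / expected_b)
    # Survival function of a chi-squared distribution with 1 degree of freedom.
    return math.erfc(math.sqrt(chi2 / 2))


# Made-up counts: a split this lopsided is unlikely to be chance.
print(sample_ratio_p_value(5000, 5400))
# A small imbalance like this one is well within normal variation.
print(sample_ratio_p_value(5050, 4950))
```

If the check fails, it is usually safer to fix the setup and restart the test than to analyze the lopsided data.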

Data Collection and Analysis

- Gather Data: Collect data on user behavior and interactions with both Version A and Version B. This data includes metrics like conversion rates, click-through rates, and bounce rates.

- Statistical Analysis: Analyze the data using statistical methods to determine which version performs better. Chi-squared tests are commonly used for rates such as conversions and click-throughs, while t-tests suit continuous metrics such as engagement time.

- Draw Conclusions: Based on the analysis, conclude whether the variant (Version B) outperforms the control (Version A) and whether the changes you implemented are effective.
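
The analysis step above can be sketched with a two-proportion z-test, which for a simple A-versus-B conversion comparison gives the same answer as a chi-squared test without continuity correction. This is a minimal illustration using only the standard library; the function name and the visitor counts are made up, and a dedicated statistics package or your testing tool's built-in report is the better choice for real decisions.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("No variation in the data; the test is undefined.")
    z = (rate_b - rate_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up results: 400 of 8,000 convert on A, 480 of 8,000 convert on B.
z, p = two_proportion_z_test(400, 8000, 480, 8000)
print(f"z = {z:.2f}, p = {p:.4f}")
if p < 0.05:
    print("The difference is unlikely to be due to chance alone.")
else:
    print("Not enough evidence that the versions differ.")
```

A positive z means Version B converted at a higher rate than Version A. The 0.05 threshold is a convention, so pick your threshold before the test starts rather than after you see the data.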

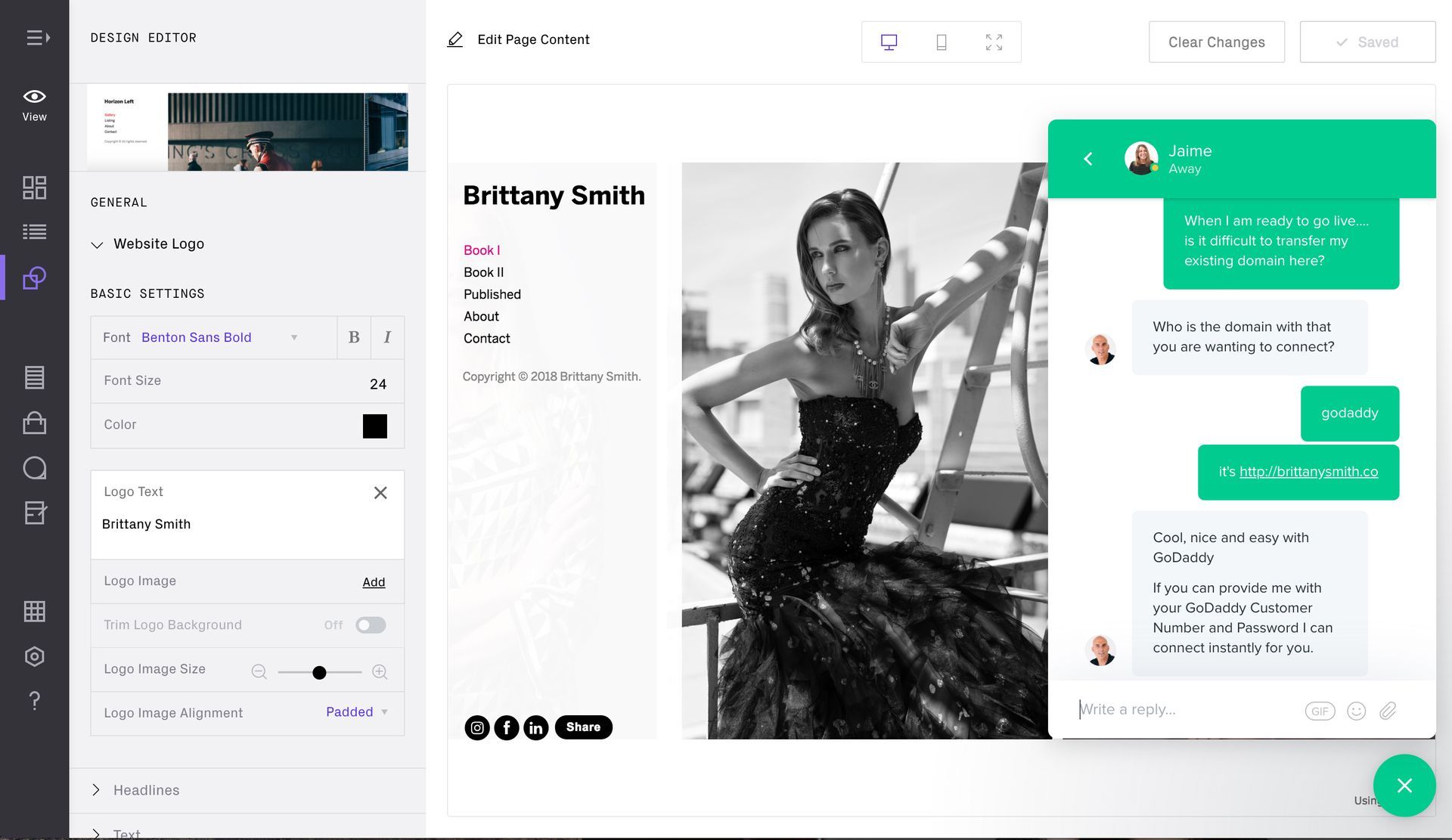

Tools and Software for A/B Testing

A/B testing in web design can be complex, and it often requires the use of specialized tools and software to effectively conduct tests and analyze results. Some popular tools and software for A/B testing include:

- Google Optimize: A free tool that allowed you to create and run A/B tests, as well as multivariate tests, with Google Analytics integration for data analysis. Google discontinued it in September 2023, so it is no longer an option for new tests.

- Optimizely: A comprehensive A/B testing platform that offers a visual editor for making design changes and powerful analytics for in-depth insights.

- VWO (Visual Website Optimizer): VWO is known for its user-friendly interface and offers features like split URL testing and multivariate testing.

- Unbounce: Primarily designed for creating and testing landing pages, Unbounce provides an easy way to build, publish, and A/B test landing page variations.

- Crazy Egg: Offers heatmaps, scrollmaps, and other visualization tools to help you understand user behavior and optimize your web design.

- Split.io: A feature flagging and experimentation platform that allows for A/B testing and feature rollouts. It's suitable for web and mobile applications.

- Convert.com: Offers A/B and multivariate testing, personalization, and experimentation capabilities for optimizing web designs.

- Adobe Target: Part of Adobe Experience Cloud (formerly Adobe Marketing Cloud), Adobe Target provides A/B testing and personalization features for optimizing user experiences.

- Hotjar: Provides heatmaps, session recordings, and survey tools to understand user behavior and gather feedback for design improvements.

It's essential to choose the right tool based on your specific needs, budget, and the complexity of your A/B testing requirements.

Best Practices for A/B Testing in Web Design

Before you begin A/B testing, you may want to familiarize yourself with web design psychology and web design branding practices. Doing so gives you deeper context to work from when you create your A/B testing models, and it will save you time.

To ensure the success of your A/B testing efforts in web design, consider the following best practices:

Setting Clear Objectives

- Define Specific Goals: Clearly articulate what you want to achieve with your A/B test. Are you looking to increase conversions, reduce bounce rates, or improve engagement metrics?

- Focus on One Objective: It's often best to concentrate on a single primary objective per A/B test. Testing too many changes at once can make it difficult to isolate the cause of performance variations.

- Use Relevant Metrics: Choose metrics that are directly related to your objectives. For example, if your goal is to increase sales, track metrics like add-to-cart rates and purchase completion rates.

Collecting Reliable Data

- Sufficient Sample Size: Ensure that your sample size is large enough to generate statistically significant results. Smaller sample sizes may lead to inconclusive findings.

- Randomize and Segment: Use randomization to assign users to different variations, and segment your data to understand how different user groups interact with your designs.

- Monitor External Factors: Be aware of external factors that could influence your results, such as seasonal trends, web design trends, marketing campaigns, or technical issues.

- Avoid Bias: Minimize bias by conducting tests without revealing which variation is the control and which is the variant to the individuals involved in the test.
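
As an example of segmenting your data, the sketch below breaks conversion rates down by user segment and version. The record keys ("segment", "variant", "converted") and the segment names are assumptions for illustration only.

```python
from collections import defaultdict


def conversion_by_segment(records):
    """Return {(segment, variant): conversion_rate}."""
    # Each entry holds [conversions, visitors] for one segment/variant pair.
    counts = defaultdict(lambda: [0, 0])
    for record in records:
        key = (record["segment"], record["variant"])
        counts[key][0] += int(record["converted"])
        counts[key][1] += 1
    return {key: conv / visitors for key, (conv, visitors) in counts.items()}


# Made-up sample data.
sample_records = [
    {"segment": "mobile", "variant": "A", "converted": True},
    {"segment": "mobile", "variant": "A", "converted": False},
    {"segment": "mobile", "variant": "B", "converted": True},
    {"segment": "mobile", "variant": "B", "converted": True},
    {"segment": "desktop", "variant": "A", "converted": False},
]
for key, rate in sorted(conversion_by_segment(sample_records).items()):
    print(key, rate)
```

Keep in mind that each segment contains only part of your traffic, so a difference that looks large within one segment may rest on too few visitors to be reliable.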

Avoiding Common Pitfalls

- Avoid Early Conclusion: Do not end the test prematurely based on early results. Some fluctuations are common, and it's essential to gather sufficient data for reliable conclusions.

- Account for Seasonality: Take into account seasonal variations in user behavior when interpreting results.

- Analyze User Segments: Segment your data to understand how different user groups respond to variations, as this can reveal valuable insights.

- Iterative Testing: Continue to A/B test iteratively, making incremental changes to your web design to achieve ongoing improvements.

- Learn from Failed Tests: Even unsuccessful A/B tests provide valuable insights. Analyze why the test didn't yield the expected results and use that knowledge for future tests.
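
To see why ending a test early is risky, the simulation below runs many A/A tests, where both versions are identical, and compares two habits: checking significance at every interim look and stopping at the first "win", versus checking only once at the planned end. All names and parameters here are hypothetical, and the simulation is a rough sketch rather than a rigorous study.

```python
import math
import random


def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value from a two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    if pooled in (0.0, 1.0):
        return 1.0  # No variation yet, so nothing can be significant.
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_aa_tests(experiments=300, looks=10, visitors_per_look=200,
                      rate=0.10, alpha=0.05, seed=1):
    """Return (false-positive rate with peeking, rate with one final check)."""
    rng = random.Random(seed)
    flagged_when_peeking = 0
    flagged_at_end = 0
    for _ in range(experiments):
        conv_a = conv_b = visitors = 0
        any_look_significant = False
        last_p = 1.0
        for _ in range(looks):
            # Both versions share the same true conversion rate.
            conv_a += sum(rng.random() < rate for _ in range(visitors_per_look))
            conv_b += sum(rng.random() < rate for _ in range(visitors_per_look))
            visitors += visitors_per_look
            last_p = p_value(conv_a, visitors, conv_b, visitors)
            if last_p < alpha:
                any_look_significant = True
        flagged_when_peeking += any_look_significant
        flagged_at_end += last_p < alpha
    return flagged_when_peeking / experiments, flagged_at_end / experiments


peeking_rate, final_rate = simulate_aa_tests()
print(f"False positives when stopping at the first significant look: {peeking_rate:.1%}")
print(f"False positives when checking once at the end: {final_rate:.1%}")
```

Since the two versions are identical, every "significant" result is a false alarm. Repeated peeking can only raise the false-alarm rate, because each extra look is another chance for random noise to cross the threshold.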

Case Studies

Let's look at a few case studies of successful A/B testing in web design to illustrate the impact it can have on user experience and business outcomes.

Booking.com

- Objective: Booking.com wanted to increase hotel bookings on its platform.

- Hypothesis: The team hypothesized that changing the color of the call-to-action (CTA) button from green to red would increase the number of bookings.

- Variation: Version A featured a green CTA button, while Version B had a red CTA button.

- Results: The A/B test showed that Version B (red CTA) had a 32% higher conversion rate, leading to a significant increase in hotel bookings.

HubSpot

- Objective: HubSpot aimed to boost the number of free trial sign-ups for its marketing software.

- Hypothesis: The hypothesis was that simplifying the sign-up form by reducing the number of fields would encourage more users to complete the form and start a free trial.

- Variation: Version A had a traditional sign-up form with multiple fields, while Version B had a simplified form with fewer fields.

- Results: The A/B test revealed that Version B (simplified form) led to a 20% increase in free trial sign-ups.

Mozilla Firefox

- Objective: Mozilla Firefox wanted to improve user engagement and retention for its browser.

- Hypothesis: The hypothesis was that users who had a more personalized and customizable start page would be more likely to continue using the browser.

- Variation: Version A featured the existing start page, while Version B introduced a more customizable start page with widgets and options.

- Results: The A/B test showed that users who experienced Version B (customizable start page) had a 15% higher retention rate, indicating a better user experience.

These case studies demonstrate how A/B testing can lead to significant improvements in user experience and help achieve specific business objectives. Whether it's increasing conversions, simplifying user interactions, or enhancing personalization, A/B testing can be a valuable tool for web designers and marketers.